Stationary iterative methods based on matrix splittings, such as Gauss-Seidel and symmetric successive over-relaxation (SSOR), are well understood for solving linear systems

where

The convergence rate is determined by the spectral radius of

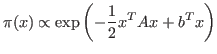

We investigate how this technology can be transferred to the problem of constructing efficient Markov chain Monte Carlo (MCMC) methods to sample from high dimensional Gaussian distributions,

where the matrix

where

The Gibbs sampling algorithm corresponds to the Gauss-Seidel and SSOR iterative solvers, as studied by Fox and Parker 2013.

Other sampling methods also correspond to matrix splittings, and we use this correspondence to help us understand the convergence properties of these sampling methods. For example Crank-Nicolson algorithms, described in Cotter et al. 2013, can be expressed as matrix splittings.

The Metropolis-Hastings algorithm is a popular method for constructing

MCMC methods, where the accept/reject step in the algorithm is used

because a proposal must be corrected so that the Markov chain converges

to the desired target distribution. For Metropolis-adjusted Langevin

(MALA) and Hamiltonian/hybrid Monte Carlo (HMC) proposals we can express

the proposal as a matrix splitting of an ``effective'' precision matrix

![]() . The difference between the target precision matrix

. The difference between the target precision matrix ![]() and the proposal precision matrix

and the proposal precision matrix ![]() is what the

Metropolis-Hastings algorithm corrects. We explore the consequences of

the matrix splitting correspondence for these proposals, in particular

the opportunity for using polynomial acceleration to construct more

efficient sampling algorithms.

is what the

Metropolis-Hastings algorithm corrects. We explore the consequences of

the matrix splitting correspondence for these proposals, in particular

the opportunity for using polynomial acceleration to construct more

efficient sampling algorithms.