We describe an application of physics-based preconditioning [L. Chacón, Phys. Plasmas 15, 056103 (2008)] to a nonlinear initial-value extended MHD code that uses high-order spectral elements for spatial discretization.

HiFi is a 2D and 3D nonlinear fluid simulation code, written in Fortran 95, with principal emphasis on extended MHD and magnetic fusion energy. The code is separated into a large solver library and a much smaller application module which links to the library, using flux-source form for the physics equations. Realistic nonlinear and time-dependent boundary conditions have been developed.
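For reference, a system in flux-source form can be written generically as follows. The argument lists of the flux $\mathbf{F}$ and source $\mathbf{S}$ are an assumption for illustration, not HiFi's exact interface; the point is that an application module specifying only $\mathbf{F}$ and $\mathbf{S}$ leaves discretization and solution to the shared library.

```latex
\frac{\partial \mathbf{u}}{\partial t}
  + \nabla \cdot \mathbf{F}(\mathbf{u}, \nabla \mathbf{u}, t)
  = \mathbf{S}(\mathbf{u}, \nabla \mathbf{u}, t)
```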

Spatial discretization uses high-order spectral elements on a curvilinear grid. Grid cells are logically rectangular, with spectral elements formed as a Cartesian product of 1D polynomial modal basis functions. Time discretization uses a fully implicit Newton-Krylov method with adaptive time steps for efficient treatment of multiple time scales.
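A minimal sketch of a tensor-product modal basis on the reference square $[-1,1]^2$. The particular 1D basis is an assumption chosen for illustration: two linear "vertex" modes plus "bubble" modes $P_k - P_{k-2}$ ($k \ge 2$, with $P_k$ the Legendre polynomials) that vanish at both ends of the interval. The function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre

def modal_basis_1d(x, order):
    """Hypothetical 1D modal basis on [-1, 1] (order >= 1): linear modes
    (1 - x)/2 and (1 + x)/2, then bubble modes P_k - P_{k-2}, which are
    zero at x = -1 and x = +1."""
    x = np.asarray(x, dtype=float)
    P = legendre.legvander(x, order)          # P[:, k] = P_k(x)
    cols = [(1.0 - x) / 2.0, (1.0 + x) / 2.0]
    for k in range(2, order + 1):
        cols.append(P[:, k] - P[:, k - 2])
    return np.stack(cols, axis=-1)            # shape (len(x), order + 1)

def tensor_basis_2d(x, y, order):
    """2D element basis as a Cartesian product of 1D modes:
    phi_ij(x, y) = phi_i(x) * phi_j(y), indexed as [a, b, i, j]."""
    bx = modal_basis_1d(x, order)
    by = modal_basis_1d(y, order)
    return np.einsum("ai,bj->abij", bx, by)

xs = np.linspace(-1.0, 1.0, 5)
B = tensor_basis_2d(xs, xs, order=4)
print(B.shape)  # (5, 5, 5, 5)
```

Because the bubble modes vanish on the cell edges, their amplitudes couple only within a cell, which is what makes them candidates for local elimination.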


Computer time and storage are dominated by the solution of large, sparse, ill-conditioned linear systems arising from the implicit time step and the multiple time scales. Static condensation is used to eliminate the amplitudes of the higher-order spectral elements in terms of those of the linear elements. HiFi is built on the PETSc library [http://www.mcs.anl.gov/petsc/petsc-as/index.html] for efficient parallel operation and easy access to many advanced methods for linear and nonlinear system solution.
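A minimal single-element sketch of static condensation, assuming the element's unknowns are ordered with the linear (vertex) amplitudes first and the higher-order amplitudes after them. The higher-order amplitudes are eliminated through a local Schur complement and recovered afterwards by back-substitution. The function name `static_condense` is hypothetical; in a full code the condensed element matrices would then be assembled into a global system.

```python
import numpy as np

def static_condense(K, f, n_b):
    """Eliminate unknowns n_b: (higher-order amplitudes) from K u = f.
    Returns the condensed matrix and right-hand side for the first n_b
    unknowns, plus a function that recovers the eliminated unknowns."""
    Kbb, Kbi = K[:n_b, :n_b], K[:n_b, n_b:]
    Kib, Kii = K[n_b:, :n_b], K[n_b:, n_b:]
    fb, fi = f[:n_b], f[n_b:]
    # Local solves with the small interior block
    Kii_inv_Kib = np.linalg.solve(Kii, Kib)
    Kii_inv_fi = np.linalg.solve(Kii, fi)
    K_c = Kbb - Kbi @ Kii_inv_Kib          # local Schur complement
    f_c = fb - Kbi @ Kii_inv_fi

    def recover_interior(u_b):
        # Back-substitution: u_i = Kii^{-1} (f_i - Kib u_b)
        return Kii_inv_fi - Kii_inv_Kib @ u_b

    return K_c, f_c, recover_interior

# Usage on a random, well-conditioned element matrix
rng = np.random.default_rng(0)
n, n_b = 8, 3
A = rng.standard_normal((n, n))
K = A @ A.T + n * np.eye(n)
f = rng.standard_normal(n)
K_c, f_c, recover = static_condense(K, f, n_b)
u_b = np.linalg.solve(K_c, f_c)
u = np.concatenate([u_b, recover(u_b)])
print(np.allclose(u, np.linalg.solve(K, f)))  # True
```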

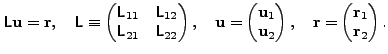

To achieve a weakly scalable parallel solution, we apply physics-based preconditioning, in which the physical dependent variables are partitioned into two sets as the basis for further reducing the order and increasing the diagonal dominance of the systems to be solved. In visco-resistive MHD, set 1 consists of the density, pressure, magnetic flux function, and current, while set 2 consists of the momentum densities. The linear system is expressed in block form as


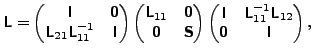
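In generic notation (the symbols are assumed here for illustration and need not match those in the figure), with $\delta x_1$ the set-1 unknowns, $\delta x_2$ the momentum unknowns, and $r_1, r_2$ the corresponding residuals, the block system, its Schur complement $S$, and the three-step solve it induces read:

```latex
\begin{pmatrix} L_{11} & L_{12} \\ L_{21} & L_{22} \end{pmatrix}
\begin{pmatrix} \delta x_1 \\ \delta x_2 \end{pmatrix}
= -\begin{pmatrix} r_1 \\ r_2 \end{pmatrix},
\qquad
S = L_{22} - L_{21} L_{11}^{-1} L_{12},

\delta x_1^{*} = -L_{11}^{-1} r_1, \qquad
S\,\delta x_2 = -r_2 - L_{21}\,\delta x_1^{*}, \qquad
\delta x_1 = \delta x_1^{*} - L_{11}^{-1} L_{12}\,\delta x_2 .
```

Substituting the last relation into the second block row recovers the middle equation, so the three steps reproduce the exact solution when $S$ is exact.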

The principal difficulty is that, while the block matrices

![]() matrices are sparse, the presense of

matrices are sparse, the presense of

![]() makes

makes ![]() matrix dense. To avoid this, we approximate

matrix dense. To avoid this, we approximate ![]() by reversing the

order of discretization and substition, giving it the form of the

well-known ideal MHD force operator. This leads to the approximate

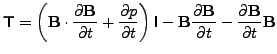

Schur complement

by reversing the

order of discretization and substition, giving it the form of the

well-known ideal MHD force operator. This leads to the approximate

Schur complement
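A toy illustration of why the exact Schur complement is dense even when every block is sparse. The blocks `L11`, `L12`, `L21`, `L22` are hypothetical stand-ins (tridiagonal diagonal blocks, identity couplings), not HiFi's actual matrices; dense NumPy arrays are used only so that nonzeros can be counted easily.

```python
import numpy as np

def tridiagonal(n, lower, diag, upper):
    """Dense storage of a sparse tridiagonal matrix (stand-in for a sparse block)."""
    return (np.diag(np.full(n, diag))
            + np.diag(np.full(n - 1, lower), -1)
            + np.diag(np.full(n - 1, upper), 1))

def nonzero_fraction(M, tol=1e-12):
    """Fraction of entries whose magnitude exceeds tol."""
    return np.count_nonzero(np.abs(M) > tol) / M.size

n = 10
L11 = tridiagonal(n, -1.0, 4.0, -1.0)   # sparse set-1 block
L22 = tridiagonal(n, -1.0, 4.0, -1.0)   # sparse momentum block
L12 = np.eye(n)                         # sparse coupling blocks
L21 = np.eye(n)

# Exact Schur complement: contains L11^{-1}, whose entries are all nonzero
S = L22 - L21 @ np.linalg.solve(L11, L12)
print(f"L11: {nonzero_fraction(L11):.2f}, S: {nonzero_fraction(S):.2f}")
# L11: 0.28, S: 1.00
```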


The matrices that must be solved, the set-1 diagonal block and the approximate Schur complement, have reduced order and are more diagonally dominant than the full Jacobian. They are further reduced by static condensation and then solved by GMRES, preconditioned by additive-Schwarz blockwise LU. This physics-based preconditioning is then followed by Newton-Krylov iteration on the full nonlinear system, using matrix-free GMRES. The convergence rate is measured by the number of KSP (PETSc Krylov solver) iterations required for the block solves, reflecting the condition number of the preconditioning matrices, and by the number of iterations in the final Newton-Krylov solve, reflecting the accuracy of the approximate Schur complement. Inaccuracy in the Schur complement influences the rate of convergence but not the final solution.
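A small sketch of the last point, using SciPy rather than PETSc and toy blocks rather than MHD operators. A block-factorization preconditioner (predictor solve with `L11`, Schur solve, corrector) is applied inside GMRES once with the exact Schur complement and once with an approximate one. As a stand-in for the force-operator approximation, the approximate Schur complement here simply replaces `L11^{-1}` by the inverse of its diagonal; that substitution, the block sizes, and all function names are assumptions for illustration.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import LinearOperator, gmres, splu, spsolve

def tridiagonal(n, lower, diag, upper):
    return sp.diags([np.full(n - 1, lower), np.full(n, diag), np.full(n - 1, upper)],
                    [-1, 0, 1], format="csc")

n = 50
L11 = tridiagonal(n, -1.0, 4.0, -1.0)          # toy set-1 block
L22 = tridiagonal(n, -1.0, 4.0, -1.0)          # toy momentum block
L12 = sp.identity(n, format="csc")             # toy coupling blocks
L21 = sp.identity(n, format="csc")
A = sp.bmat([[L11, L12], [L21, L22]], format="csc")
lu11 = splu(L11)

# Exact (dense) Schur complement and a sparse approximation of it
S_exact = L22.toarray() - L21 @ lu11.solve(L12.toarray())
D11_inv = sp.diags(1.0 / L11.diagonal())
S_approx = (L22 - L21 @ D11_inv @ L12).tocsc()

def block_preconditioner(S):
    """Apply the block factorization with Schur complement S to a vector r."""
    lu_s = splu(sp.csc_matrix(S))
    def apply(r):
        r = np.ravel(r)
        y1 = lu11.solve(r[:n])                   # predictor: L11 y1 = r1
        y2 = lu_s.solve(r[n:] - L21 @ y1)        # Schur solve
        y1 = y1 - lu11.solve(L12 @ y2)           # corrector
        return np.concatenate([y1, y2])
    return LinearOperator((2 * n, 2 * n), matvec=apply)

def solve_and_count(M, b):
    """Run preconditioned GMRES and count its inner iterations."""
    residuals = []
    x, info = gmres(A, b, M=M, callback=residuals.append, callback_type="pr_norm")
    return x, info, len(residuals)

b = np.ones(2 * n)
x_ref = spsolve(A, b)
for name, S in [("exact Schur", S_exact), ("approximate Schur", S_approx)]:
    x, info, iters = solve_and_count(block_preconditioner(S), b)
    err = np.linalg.norm(x - x_ref) / np.linalg.norm(x_ref)
    print(f"{name}: {iters} GMRES iterations, relative error {err:.1e}")
```

With the exact Schur complement the preconditioner is the inverse of the block matrix, so GMRES converges almost immediately; the approximate version needs more iterations but reaches the same solution to within the GMRES tolerance.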

Future efforts will be devoted to weak scaling tests on large parallel computers; exploration of other methods of solution for the reduced preconditioning equations; and extending the approximate Schur complement to include two-fluid effects.